Create a Specialist Chatbot with a Modern Toolset: Streamlit, GPT-4 and the Assistants API

With Streamlit’s sophisticated chat interface, a powerful GPT-4 backend and the OpenAI Assistants API, we can build pretty much any specialist chatbot.

With the right tools — Streamlit, the GPT-4 LLM and the Assistants API — we can build almost any chatbot.

Streamlit combines the power of Python programming with an easily constructed chat interface to provide the ideal front end to an AI-based application;

GPT-4 is the most powerful LLM from OpenAI, so far;

and the Assistants API provides the link between the two, controlling the behaviour of the LLM, organising the communications between the front and back ends, as well as providing access to tools such as code interpretation and data retrieval.

The combination of these three elements gives us an extremely powerful framework for developing specialist chatbots.

The possibilities are endless! For example:

a company could upload its product documentation and company procedures and be on the way to creating a customer service chatbot;

a developer could grab the complete set of Streamlit documentation to build an expert coder for data science apps (note that while the Streamlit documentation is open-sourced under the Apache 2 licence, you should always check licences and copyright to ensure that you have the appropriate rights and permissions to use data from sources other than your own);

you could upload web pages scraped from online newspapers and easily create a news aggregator/summariser (again, you must check copyright and licences/permissions if you intend to re-publish any data from external sources — for private use it’s often ok but you should always check that you are using data legally!);

a bird fancier might like to upload bird-related articles from Wikipedia and create a virtual ornithologist.

OK, that last example might seem a little niche but it is what we are going to develop as a demonstrator to show how the three elements can come together to create a specialist chatbot.

The thing is that although Streamlit is open source and lets you deploy apps for free on the Streamlit Community Cloud, OpenAI charges for using its LLMs, APIs and file storage. It’s not terrifically expensive to experiment with OpenAI but, so as not to break the bank, we aim to present a simple example of a specialist chatbot that only uses a small amount of data and will only cost a few cents to run. (If you haven’t already done so you can get a free trial of the OpenAI API that will cover the cost of running this application and much more.)

I explained the basics of the Assistants API from OpenAI in the article How to Use the Powerful New Assistants API for Data Analysis. There I used a simple data file to power a data-aware chatbot, with the demonstration code contained in a Jupyter Notebook. In this article, we will develop a web app with Streamlit that uses the Assistants API to read and analyse textual data and provide an ‘expert’ view of the information in that data.

I downloaded a random article from Wikipedia as a PDF — it’s about Australian Rock Parrots (data from Wikipedia is usable under the Creative Commons Attribution-ShareAlike License 4.0). We’ll use this as the knowledge base for our chatbot.

The file is about 7 pages long, so it is not a vast resource, but it will be sufficient to illustrate how we can build a chatbot using specialist text.

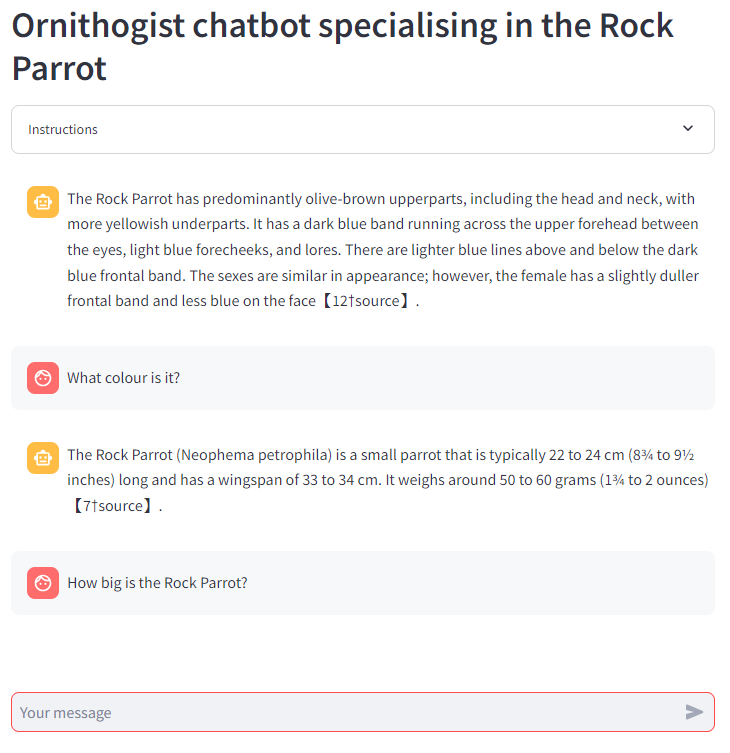

The screenshot below shows what the chatbot will look like. The conversation is listed in reverse chronological order, so the top of the list is the latest response and the second is the latest query.

I explored the Streamlit chat interface in the article ‘Using Streamlit’s Chat Elements: the Doctor is in’, which was based on Eliza, the early natural language processing program created at MIT by Joseph Weizenbaum between 1964 and 1966. This app uses the same UI elements.

Notice that the chatbot remembers what we are talking about. I first asked it something about the Rock Parrot and then simply referred to the bird as ‘it’. This memory of the conversation is a built-in function of the Assistants API, so we don’t need to write code to implement it. That was not the case with the ‘Doctor’ app, where the conversation had to be saved to the Streamlit session state.

The Assistants API

The Assistants API comprises three main elements: assistants, threads and runs. Additionally, it uses messages and tools. Below is a short excerpt from the article How to Use the Powerful New Assistants API for Data Analysis that gives an overview of these various parts. If you are unfamiliar with the API and need more detail, please read the article or refer to the OpenAI Assistants documentation.

There are three main parts to the Assistants API:

Assistants: These are the starting point and they specify several aspects of the assistant: a model (e.g. gpt-4-1106-preview); instructions that inform the model about the type of behaviour we expect from it; tools such as the code interpreter and file retrieval; and files that we want the model to use.

Threads: These represent the state of a conversation and will contain the messages that are generated both by the user and the assistant. A thread is not associated with an assistant until a run is started; rather, it is a separate entity that will be used alongside an assistant during a run.

Runs: These control the execution of an assistant with a thread. The run takes the information in the thread and the assistant and manages the interaction with the LLM. Runs are asynchronous and go through a number of steps before completion, so we need to poll them to determine when they are complete. When the run is complete, we can then interrogate the thread to see what response the assistant has come up with.

In addition to these objects, we also utilise messages. A message can be a request from the user or a response from the LLM. Initially, it would be normal to create a message with a user request. This is then added to a thread before the run starts. During the run, the LLM will have responded with new messages that will also have been added to the thread and, as mentioned above, the responses can then be read from the thread.
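The polling step is easy to get wrong, so here is one way to write it. The app later calls a `wait_on_run` helper whose body isn't shown in this article. What follows is a minimal sketch of such a helper, not the author's version. It assumes the openai v1 Python SDK method `client.beta.threads.runs.retrieve(thread_id=..., run_id=...)` and treats `queued` and `in_progress` as the "still working" statuses. Unlike the app's two-argument call, it takes the client explicitly. The `poll_interval` and `timeout` parameters are illustrative additions.

```python
import time


def wait_on_run(client, run, thread, poll_interval=0.5, timeout=60.0):
    """Poll an Assistants API run until it leaves the queued/in_progress states.

    Sketch only: assumes the openai v1 SDK call
    client.beta.threads.runs.retrieve(thread_id=..., run_id=...).
    """
    deadline = time.monotonic() + timeout
    # Runs are asynchronous, so keep re-fetching the run until its status changes
    while run.status in ("queued", "in_progress"):
        if time.monotonic() > deadline:
            raise TimeoutError(f"Run {run.id} still {run.status} after {timeout}s")
        time.sleep(poll_interval)
        run = client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)
    # The caller should still check for "completed" versus "failed", "requires_action", etc.
    return run
```

The helper returns the final run object rather than assuming success. A failed or expired run therefore surfaces to the caller instead of being silently treated as a finished answer.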

The Chatbot app

In this app, we will be creating an assistant that uses the file retrieval tool (which allows the assistant to read text files) and arming it with the Rock Parrot PDF from Wikipedia. To create the chat interface that we see above, we will build a Streamlit app that uses the st.chat_input and st.chat_message methods. The input method provides the user input field that we see at the bottom of the screenshot above. The message elements use the role assigned to a message (either user or assistant) to display the messages, with an appropriate icon, above the input element.

Saving the session data

Apart from those UI elements, the screen contains a title and an expander element that displays the instructions for using the chatbot and prompts the user for a valid OpenAI API key. The app will not function without a key and, once entered, the key is stored in the app’s session state.

```python
st.session_state.key = st.text_input("API key")
```

Once a key has been entered, we can initialise the app. In the code below, we first create an OpenAI client and then an assistant and a thread, as long as they do not already exist.

(Note that this approach is used so that this app can be deployed as a demonstrator using the user’s API key. If I were to be developing an app for real, I’d keep the API key as a Streamlit secret.)

```python
import streamlit as st
from openai import OpenAI

# Initialise session state
if 'key' not in st.session_state:
    st.session_state.key = ""

# Check if the key has been set
if st.session_state.key != "":
    # We have a key so create a client and an assistant
    if 'client' not in st.session_state:
        st.session_state.client = OpenAI(api_key = st.session_state.key)
    if 'assistant' not in st.session_state:
        # create_assistant_and_thread() is defined below
        create_assistant_and_thread(st.session_state.client)
```

We use the session state to store the objects that the app relies on, and we must test for their existence before creating them because of the way Streamlit runs an app.

When you first open the app, the entire script is executed. Each time a user input is detected (e.g. entering a key), the script is re-run from the beginning. So the state of the app, meaning the client, assistant, thread and so on, must be stored so that it survives those re-runs.
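The re-run model can be simulated without Streamlit to show why the `not in st.session_state` guards matter. In this sketch a plain dict stands in for `st.session_state`, and `script_run` stands in for one top-to-bottom execution of the app. `get_or_create` and `script_run` are hypothetical names used only for illustration. The factory that would build an expensive object, such as the OpenAI client, is called only on the first run.

```python
def get_or_create(state, name, factory):
    """Return state[name], calling factory() only if nothing is stored yet."""
    if name not in state:
        state[name] = factory()
    return state[name]


def script_run(state, counter):
    """One simulated top-to-bottom execution of a Streamlit script."""
    def make_client():
        counter["clients_created"] += 1
        return object()  # placeholder for OpenAI(api_key=...)
    return get_or_create(state, "client", make_client)


session_state = {}                   # persists across reruns, like st.session_state
counter = {"clients_created": 0}
first = script_run(session_state, counter)
for _ in range(3):                   # e.g. three further user interactions
    again = script_run(session_state, counter)
```

After four simulated runs, only one client has been created, and every run sees the same object. Because `st.session_state` also supports `in` and item assignment, the same helper should work with it directly.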

In the code above the assistant and thread are created by calling a function. Here is that function:

```python
def create_assistant_and_thread(client):
    # Upload the knowledge-base file so the assistant can use it
    with open("Rock_parrot.pdf", "rb") as f:
        file = client.files.create(file=f, purpose="assistants")

    # Create the assistant with a name, instructions, tools and the uploaded file
    st.session_state.assistant = client.beta.assistants.create(
        name = "Ornithologist",
        instructions="You are an ornithologist. Use your knowledge base to best respond to queries.",
        model="gpt-4-1106-preview",
        tools=[{"type": "retrieval"}, {"type": "code_interpreter"}],
        file_ids=[file.id],
    )

    # Create an empty thread to hold the conversation
    st.session_state.thread = client.beta.threads.create()
```

As you can see, the file is read and used to create a file object. This is then incorporated into an assistant along with a name, instructions and a list of tools. I’ve specified both the retrieval and code_interpreter tools here because that gives the assistant access to more file types. We have hard-coded the PDF file in this application and the retrieval tool can deal with this, but if we wanted to access image files, CSVs or certain program files we would need the code_interpreter tool.
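If the knowledge base were not hard-coded, the `tools` list could be built from the files being uploaded instead of always enabling both tools. The helper below is a hypothetical sketch of that idea. The extension sets are illustrative assumptions based on the distinction described above, not OpenAI's official lists of supported file types, so check the current documentation before relying on them.

```python
from pathlib import Path

# Illustrative assumption: which file extensions to route to each tool.
RETRIEVAL_EXTS = {".pdf", ".txt", ".md", ".docx", ".html"}
CODE_INTERPRETER_EXTS = {".csv", ".xlsx", ".png", ".jpg", ".py", ".json"}


def tools_for_files(filenames):
    """Build an Assistants API `tools` list suited to the given file names."""
    exts = {Path(name).suffix.lower() for name in filenames}
    tools = []
    if exts & RETRIEVAL_EXTS:
        tools.append({"type": "retrieval"})
    if exts & CODE_INTERPRETER_EXTS:
        tools.append({"type": "code_interpreter"})
    return tools
```

For the Rock Parrot PDF alone, this returns just the retrieval tool. Enabling both tools unconditionally, as the app does, is simpler and costs nothing in code, so this is only worth it if you want the assistant's capabilities to follow the data.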

After creating the assistant, we create an empty thread. This will be used later to hold the messages from the user and the assistant.

The chat interface

The objects that we have just created are used in the chat interface, as we can see in the code below. The user is prompted for input, which is used to create a message, and that message is added to the thread that we created earlier.

The thread and the assistant are then run on the model and, after waiting for the run to complete, the thread is interrogated for the messages that it contains. These messages will include the user prompt and the response from the assistant (and after more runs the thread will contain the entire history of prompts and responses).

We then loop over those messages, extracting the role (user or assistant) and the text of each message. These are then displayed with Streamlit’s chat_message function.

```python
if prompt := st.chat_input():
    if "thread" in st.session_state:
        # Add the user's prompt to the thread as a new message
        message = st.session_state.client.beta.threads.messages.create(
            thread_id=st.session_state.thread.id,
            role="user",
            content=prompt
        )
        # Run the assistant on the thread
        run = st.session_state.client.beta.threads.runs.create(
            thread_id=st.session_state.thread.id,
            assistant_id=st.session_state.assistant.id,
        )
        # wait_on_run() is a helper in the full app that polls until the run finishes
        run = wait_on_run(run, st.session_state.thread)
        # Read back every message in the thread and display it with its role
        messages = st.session_state.client.beta.threads.messages.list(thread_id=st.session_state.thread.id)
        for m in messages:
            with st.chat_message(f"{m.role}"):
                st.markdown(f"{m.content[0].text.value}")
    else:
        st.info("You need to provide an OpenAI API key to continue")
```

And that is all there is to it: initialise the assistant with instructions and file(s) for it to use; prompt the user for a query; run that query; and extract and display the responses.
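The Assistants API lists messages newest first by default, which is why the conversation in the screenshot appears in reverse chronological order. If you prefer the usual oldest-first layout, you can pass `order="asc"` to `messages.list`, or you can reverse the messages before rendering them. The sketch below takes the second approach. `to_chat_pairs` and `fake_message` are hypothetical helpers. The stand-in objects mimic only the attributes the app already uses (`m.role` and `m.content[0].text.value`). As in the app, the sketch assumes that each message's first content part is text.

```python
from types import SimpleNamespace


def to_chat_pairs(messages, oldest_first=True):
    """Turn Assistants API message objects into (role, text) tuples.

    Assumes each message's first content part is text, as the app does.
    The API returns newest first by default, so reverse for chat order.
    """
    pairs = [(m.role, m.content[0].text.value) for m in messages]
    return list(reversed(pairs)) if oldest_first else pairs


def fake_message(role, text):
    """Stand-in shaped like an SDK message: m.role, m.content[0].text.value."""
    return SimpleNamespace(role=role, content=[SimpleNamespace(text=SimpleNamespace(value=text))])


newest_first = [
    fake_message("assistant", "Hi, how can I help?"),
    fake_message("user", "Hello"),
]
chat = to_chat_pairs(newest_first)
```

In the app, the display loop would become `for role, text in to_chat_pairs(messages):` followed by the same `st.chat_message(role)` and `st.markdown(text)` calls.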

I have only given you the main parts of the code here but you can download the complete app from my GitHub repository where you will also find the Rock Parrot PDF and other related files.

Dealing with references

As we know, LLMs are prone to hallucination: they invent plausible responses that are entirely fictional. Providing the model with the information it needs by adding user-provided files to the assistant is one way of mitigating this problem.

To help show that a response is grounded in the supplied files, the Assistants API includes references in its results. You can see one in the screenshot below.

Unfortunately, “7†source” is not very helpful beyond letting us know that the information came from a provided source rather than being made up.

The OpenAI documentation includes sample code for interpreting these references. However, using that code would almost double the size of this app and, to be honest, it looks like a bit of a kludge. So, for now, I am ignoring this feature and hoping that, when the Assistants API comes out of beta, there will be a better method for dealing with references.
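If you do want to tidy up the reference markers, a much smaller approach than the documentation sample is possible. The sketch below replaces each annotation's marker with a numbered footnote and collects the cited file IDs. It assumes the beta-era message shape, in which `m.content[0].text` has a `value` and a list of `annotations`. Each annotation is assumed to have a `text` attribute (the marker as it appears in the reply) and, for citations, a `file_citation.file_id`. `add_footnotes` is a hypothetical helper. Turning the file IDs into readable file names is left out.

```python
def add_footnotes(text_content):
    """Replace annotation markers in a message's text with [n] footnotes.

    Sketch assuming the Assistants API (v1 beta) shape: text_content.value is
    the reply and text_content.annotations holds objects with a .text marker
    and, for file citations, .file_citation.file_id.
    """
    value = text_content.value
    cited_files = []
    for n, ann in enumerate(text_content.annotations, start=1):
        # Replace only the first occurrence so numbering follows annotation order
        value = value.replace(ann.text, f"[{n}]", 1)
        citation = getattr(ann, "file_citation", None)
        cited_files.append(citation.file_id if citation else None)
    return value, cited_files
```

In the app, `st.markdown` could then show the returned text, with the list of cited files rendered underneath as footnotes.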

Whether you think that the Assistants API is a worthwhile development probably depends on the type of application that you are developing. I have written two types of app using the API: a Jupyter Notebook that acts as a data analyst and this one that implements a chatbot in Streamlit. In both cases, the app has been fairly easy to write and has produced the sort of result that we might expect.

One large caveat is that the files I used in these apps have been very small and the apps themselves are basic. It would be interesting to know how the response times might change if more, or larger, files were used.

All in all, this does seem to me to be a good approach and I will be interested to see how the API develops after the beta stage. Thanks for reading and I hope that this has been useful.

You can find the code for both this article and the previous one in the data-assistant folder in my GitHub repository.

Please visit my website, where you will find links to my other articles and to books that I have found useful.

All images and screenshots are by me, the author, unless otherwise noted. Apart from being a product user, I have no affiliation with any organisation mentioned in the article.