Full Article: How to Use the Powerful New Assistants API for Data Analysis

OpenAI’s Assistants API lets us create AI assistants which leverage tools that can operate on user-provided data

(Note: This post is a replacement for a previous one which was a summary and a link to the original article. This is the complete article.)

“The Assistants API allows you to build AI assistants within your own applications. An Assistant has instructions and can leverage models, tools, and knowledge to respond to user queries”, OpenAI.

Sounds great, so we are going to look at how we can use the new API to do data analysis on local data.

The Assistants API represents an approach that is an alternative to at least some uses of Retrieval Augmented Generation (RAG). So has RAG just been a stopgap measure, a temporary solution to the drawbacks of the current generation of LLMs? After all, LlamaIndex’s Jerry Liu has said that RAG is just a hack (albeit a powerful one).

Here are three specific problems inherent to LLMs that RAG currently addresses and that the Assistants API will also tackle:

LLMs are out of date. It takes a lot of time and money (not to mention energy) to train a model, so the information that they were trained on could be a couple of years old.

LLMs don’t know about your data. It is quite unlikely that your data were part of the training set for an LLM.

LLMs hallucinate. Sometimes they will give entirely plausible responses that are totally false.

By providing the LLM with data that is relevant to your application you can reduce these problems.

For example, if you want the LLM to produce Streamlit code, you could give it data from the latest documentation to enable it to use new features of the framework. Or, if you want to do some analysis on some specific data, then clearly giving it that data is essential. And, finally, by providing relevant data to the LLM, you increase the chance of it providing a suitable response and thus reduce the possibility of it just making things up.

While RAG has been used to mitigate these issues, they are now also addressed by the new Assistants API. The RAG approach makes queries to a locally provided vector database and extracts relevant data from it before passing it to the LLM. In contrast, this new API will allow you to upload your data so that the Assistant can operate with it directly.

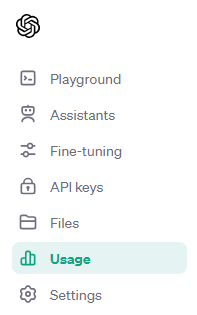

As an illustration of the potential use of the Assistants API, we are going to use it to perform some analysis on a (very) small data set. This could be done in the OpenAI Playground and you can see the interface for creating one in the screenshot below.

But we are going to be programming in Python so that we can see how to incorporate assistants in a standalone project.

Get an OpenAI account

If you don’t already have one, you’ll need an OpenAI account and an API key. Go to OpenAI.com and sign up.

Does this mean you are going to get charged?

Yes, I’m afraid so — but it’s not a lot. One of the reasons that we are going to use a small dataset is that it is a low-cost option.

Charges vary depending on the model you are using (GPT-3.5, GPT-4, etc) and the number of tokens that are generated, there is an additional fee for using the Assistants API, and you will also be charged for storing files (2 cents per gigabyte per day. Hint: don’t forget to delete files that you are not using, you can do it from your OpenAI dashboard or programmatically via the API).

There is generally a free trial that you can take advantage of — at the time of writing, I believe OpenAI is offering $5 worth of free usage which is much more than you will need to try out the code here.

My OpenAI free trial ran out long ago so I am now getting charged but the fees for running a small experiment like the one we are about to embark upon are not large. I created a Jupyter Notebook to use the API — with some of the code that we’ll see shortly — and even though it went through a few iterations, my bill was only a few 10s of cents.

You can keep an eye on the bill you are racking up in the Usage section of the OpenAI dashboard. There are also sections for Assistants and Files which you should also monitor and where you can delete individual items.

Get the SDK

If you have played around with the APIs in the past then you will already have the OpenAI SDK. However, you may need to upgrade it to use the Assistants API.

pip install --upgrade openai If you are starting from scratch just miss out the --upgrade option.

The Assistant API

Essentially the Assistant API gives the programmer tools to manage a specific type of interaction with an LLM. It defines the LLM model and sets its behaviour, stores an ongoing interaction with the LLM, and manages that interaction.

There are three main parts to the Assistants API:

Assistants:These are the starting point and they specify several aspects of the assistant: a model (e.g.gpt-4-1106-preview); instructions that inform the model about the type of behaviour we expect from it; tools such as the code interpreter and file retrieval; and files that we want to the model to use.Threads:These represent the state of a conversation and will contain the messages that are generated both by the user and the assistant. A thread is not associated with an assistant until arunis started, rather it is a separate entity that will be used alongside an assistant during arun.Runs:These control the execution of anassistantwith athread. Theruntakes the information in thethreadand theassistantand manages the interaction with the LLM.Runsare asynchronous and go through a number of steps before completion so we need to poll them to determine when they are complete. When therunis complete, we can then interrogate thethreadto see what response the assistant has come up with.

In addition to these objects, we also utilise messages. A message can be a request from the user or a response from the LLM. Initially, it would be normal to create a message with a user request. This is then added to a thread before the run starts. During the run, the LLM will have responded with new messages that will have also been added to the thread and, as mentioned above, the responses can then be read from the thread.

Let’s create an assistant

An assistant contains a textual name, instructions and a model (e.g. GPT4) and returns an object with an id that we can use to refer to the assistant. Our purpose here is to create an assistant that will help us analyse CSV data, so we give it a suitable name, “Data Assistant”, and provide instructions on how it should behave, “You are a data analyst who can analyse and interpret data files such as CSV and can draw conclusions from the data”, and the LLM model that we want to use. In this case, we need to use the Assistants-compatible model, gpt-4–1106-preview.

Here is the Python code for creating this assistant.

# Create an assistant and show what it looks like

assistant = client.beta.assistants.create(

name="Data Assistant",

instructions="You are a data analyst who can analyse and interpret data files such as CSV and can draw conclusions from the data",

model="gpt-4-1106-preview",

)The object that is returned has more attributes than we have given it. For example, it will have been assigned an id that we will need to use later.

# Given that we have created an assistant named 'assistant'

# we access its id thus:

id = assistant.idAlso, we can see all of the other attributes using a utility function from the OpenAI Cookbook¹.

import json

# This utility function uses the IPython function 'display' to pretty-print

# the JSON of an Assistants API object.

# This code is copied directly from the OpenAI Cookbook (MIT Licence).

def show_json(obj):

display(json.loads(obj.model_dump_json()))We can use this to look at the assistant that we have just created.

show_json(assistant)The result will look like the listing below.

{'id': 'asst_y9cljnz0u79cbsCJWYF2dDBr',

'created_at': 1705136202,

'description': None,

'file_ids': [],

'instructions': 'You are a data analyst who can analyse and interpret data files such as CSV and can draw conclusions from the data',

'metadata': {},

'model': 'gpt-4-1106-preview',

'name': 'Data Assistant',

'object': 'assistant',

'tools': []}We will be adding files and tools attributes to this later but this is all we need for the moment.

That is the starting point but to be able to do anything with the assistant we need to construct a thread.

Create a thread

A thread represents the state of a conversation and contains messages but initially, we just create an empty one. The code below returns an empty thread which which we can also view with the the utility function that we saw earlier.

thread = client.beta.threads.create()

show_json(thread){'id': 'thread_WT86WCsNuuVN7d111Mywi42Z',

'created_at': 1705136202,

'metadata': {},

'object': 'thread'}The next step is to create a user message and add it to the thread.

Add a message to a thread

A message will always be associated with a thread and we do this by using the thread id as one of the message attributes. We also assign a role. This will be the first message from the user so the role is ‘user’ and we also add the content of the message as a string. We haven’t provided any data for the LLM to work with, yet, so we are just going to ask it a simple question about Pandas.

message = client.beta.threads.messages.create(

thread_id=thread.id,

role="user",

content="I need to find the mean value of a column in a pandas dataframe, can you help",

)Now we have an assistant, a thread and we have added a message to the thread that represents our question to the LLM.

The next step is to run it and see how the LLM will respond.

Create (execute) a run from a thread and an assistant

To create a run we simply need to specify the assistant and the thread.

run = client.beta.threads.runs.create(

thread_id=thread.id,

assistant_id=assistant.id,

)Wait until the run is finished

A run will go through a number of steps before it provides a result and as we mentioned earlier, it is asynchronous so we have to poll its status to see when it has finished. The code below achieves that by retrieving the run and checking its status in a loop — and pausing for half a second before trying again. When the run is no longer queued or in_progress then we can take a look at how the LLM has responded.

The function below is from OpenAI Cookbook¹.

import time

# Poll a run until it has completed

# This code is copied directly from the OpenAI Cookbook (MIT Licence).

def wait_on_run(run, thread):

while run.status == "queued" or run.status == "in_progress":

run = client.beta.threads.runs.retrieve(

thread_id=thread.id,

run_id=run.id,

)

time.sleep(0.5)

return runBelow we use the function to wait for the run to complete.

run = wait_on_run(run, thread)So what is the result? We need to take a look a the messages.

Inspect the messages

We retrieve the messages from the thread and here we will find the response from the LLM. In the next piece of code, we get the messages and then print out the JSON of the messages object. The JSON is not very readable but we will do something about that.

messages = client.beta.threads.messages.list(thread_id=thread.id)

show_json(messages){'data': [{'id': 'msg_OE189P7ud3lgNx6XaBym0Twh',

'assistant_id': 'asst_Mqid9aVUOpaK3gLgmuHdFh7H',

'content': [{'text': {'annotations': [],

'value': 'Certainly! In order to calculate the mean value of a column in a pandas DataFrame, you can use the `.mean()` method. Here’s a step-by-step guide to doing just that:\n\nStep 1: First, ensure that you have pandas installed. If not, you can install it using `pip`:\n```bash\npip install pandas\n```\n\nStep 2: Import pandas in your Python script or notebook:\n```python\nimport pandas as pd\n```\n\nStep 3: Load your data into a pandas DataFrame. Assuming your data is in a CSV file, you can use the `read_csv` function:\n```python\ndf = pd.read_csv(\'path_to_your_csv_file.csv\')\n```\n\nStep 4: Calculate the mean of the desired column. Let’s say the column name is \'your_column_name\':\n```python\nmean_value = df[\'your_column_name\'].mean()\n```\n\nAnd that\'s it! The variable `mean_value` now holds the mean of the specified column.\n\nHere\'s an example with a complete snippet of code:\n\n```python\nimport pandas as pd\n\n# Load data\ndf = pd.read_csv(\'path_to_your_csv_file.csv\')\n\n# Calculate mean\nmean_value = df[\'your_column_name\'].mean()\n\n# Print the result\nprint(f"The mean value of the column \'your_column_name\' is {mean_value}")\n```\n\nJust replace `\'path_to_your_csv_file.csv\'` with the actual path to your CSV file, and `\'your_column_name\'` with the actual column name you\'re interested in.\n\nKeep in mind that if your column contains non-numeric data, you would first need to clean or convert the data to numeric types, as the mean function applies to numerical data only. Additionally, ensure that there are no `NaN` values or consider using `.mean(skipna=True)` to skip `NaN` values when computing the mean if your data includes missing values.'},

'type': 'text'}],

'created_at': 1705250906,

'file_ids': [],

'metadata': {},

'object': 'thread.message',

'role': 'assistant',

'run_id': 'run_dQknLUHs0DuERN7QnmfO9nZk',

'thread_id': 'thread_TVLDDcv8ZWAaKTbnAhBwjRJI'},

{'id': 'msg_KIKyi9JoGPI88LqAAU0OS5ju',

'assistant_id': None,

'content': [{'text': {'annotations': [],

'value': 'I need to find the mean value of a column in a pandas dataframe, can you help'},

'type': 'text'}],

'created_at': 1705249356,

'file_ids': [],

'metadata': {},

'object': 'thread.message',

'role': 'user',

'run_id': None,

'thread_id': 'thread_TVLDDcv8ZWAaKTbnAhBwjRJI'}],

'object': 'list',

'first_id': 'msg_OE189P7ud3lgNx6XaBym0Twh',

'last_id': 'msg_KIKyi9JoGPI88LqAAU0OS5ju',

'has_more': False}The messages are recorded in reverse chronological order, i.e. the first message is the last one to be generated. Note that there are two messages in the JSON above (they start with the field id). The first is the response from the LLM and has the role assistant. The second one is the user message that the LLM should respond to: “I need to find…”.

It’s not easy to read because the text of the message is all on one line. To solve the readability problem we can use another function from the OpenAI Cookbook¹.

# Pretty printing helper from the OpenAI Cookbook

def pretty_print(messages):

print("# Messages")

for m in messages:

print(f"{m.role}: {m.content[0].text.value}")

print()If we use that function on the returned messages…

pretty_print(messages)…we get a much easier-to-read result:

# Messages

assistant: Certainly! In order to calculate the mean value of a column in a pandas DataFrame, you can use the `.mean()` method. Here’s a step-by-step guide to doing just that:

Step 1: First, ensure that you have pandas installed. If not, you can install it using `pip`:

```bash

pip install pandas

```

Step 2: Import pandas in your Python script or notebook:

```python

import pandas as pd

```

Step 3: Load your data into a pandas DataFrame. Assuming your data is in a CSV file, you can use the `read_csv` function:

```python

df = pd.read_csv('path_to_your_csv_file.csv')

```

Step 4: Calculate the mean of the desired column. Let’s say the column name is 'your_column_name':

```python

mean_value = df['your_column_name'].mean()

```

And that's it! The variable `mean_value` now holds the mean of the specified column.

Here's an example with a complete snippet of code:

```python

import pandas as pd

# Load data

df = pd.read_csv('path_to_your_csv_file.csv')

# Calculate mean

mean_value = df['your_column_name'].mean()

# Print the result

print(f"The mean value of the column 'your_column_name' is {mean_value}")

```

Just replace `'path_to_your_csv_file.csv'` with the actual path to your CSV file, and `'your_column_name'` with the actual column name you're interested in.

Keep in mind that if your column contains non-numeric data, you would first need to clean or convert the data to numeric types, as the mean function applies to numerical data only. Additionally, ensure that there are no `NaN` values or consider using `.mean(skipna=True)` to skip `NaN` values when computing the mean if your data includes missing values.

user: I need to find the mean value of a column in a pandas dataframe, can you helpYou still ideal but we can see much more easily that the LLM has responded with the sort of message that you might get from the web version of ChatGPT.

As a matter of interest, running the code that we have seen so far just once landed me with a bill from OpenAI of a massive 1 cent!

We could add more messages, run them and simulate a ChatGPT web session but the focus of this article is to look at how we can get the assistant to analyse data. So let’s move on to that.

Add the code interpreter tool

We aim to upload a CSV file and enable the assistant to read and analyse it and to do this we need to provide the assistant with the appropriate tool.

Currently, there are three types of OpenAI-hosted tools available: Code Interpreter, Knowledge Retrieval and Function calling. Knowledge Retrieval is aimed at reading text files (although these could be program code) while the Code Interpreter can read images, zip files, certain programming languages that Knowledge Retrieval cannot cope with and CSV files. Function calling is not something that concerns us here but we may return to it another day.

So, we need the Code Interpreter.

assistant = client.beta.assistants.update(

assistant.id,

tools=[{"type": "code_interpreter"}],

)

show_json(assistant){'id': 'asst_y9cljnz0u79cbsCJWYF2dDBr',

'created_at': 1705136202,

'description': None,

'file_ids': [],

'instructions': 'You are a data analyst who can analyse and interpret data files such as CSV and can draw conclusions from the data',

'metadata': {},

'model': 'gpt-4-1106-preview',

'name': 'Data Assistant',

'object': 'assistant',

'tools': [{'type': 'code_interpreter'}]}The code above adds the tool and you can see that the assistant has been updated to include it.

This gives us the capability to read and interpret CSV files so now we need to provide the file.

Add a file

The file that we are going to use is a very simple CSV table of EU countries and their populations. It is assumed to be in the same folder as the notebook and it looks like this:

(As I mentioned earlier, this file is deliberately small because we don’t want to land ourselves with a large usage bill for this simple experiment.)

We do a conventional file read and then add the file to the assistant (note that the assistant requires the file to be open in binary mode).

# Upload the file

file = client.files.create(

file=open(

"europop.csv",

"rb",

),

purpose="assistants",

)

# Update Assistant

assistant = client.beta.assistants.update(

assistant.id,

tools=[{"type": "code_interpreter"}],

file_ids=[file.id],

)

show_json(assistant){'id': 'asst_Mqid9aVUOpaK3gLgmuHdFh7H',

'created_at': 1705249345,

'description': None,

'file_ids': ['file-2dDVMRoUs7Kj0s2rnhcthlun'],

'instructions': 'You are a data analyst who can analyse and interpret data files such as CSV and can draw conclusions from the data',

'metadata': {},

'model': 'gpt-4-1106-preview',

'name': 'Data Assistant',

'object': 'assistant',

'tools': [{'type': 'code_interpreter'}]}We can see now that the assistant has been updated with a file id, so let’s give it a task.

# Create a message to append to our thread

message = client.beta.threads.messages.create(

thread_id=thread.id,

role="user",

content="""Please list the names, populations and percentage of the total, of the countries

in order of descending population size?"""

)

# Execute our run

run = client.beta.threads.runs.create(

thread_id=thread.id,

assistant_id=assistant.id,

)

# Wait for completion

wait_on_run(run, thread)

# Retrieve all the messages added after our last user message

messages = client.beta.threads.messages.list(

thread_id=thread.id,

order="asc",

after=message.id

)

show_json(messages)The code above should look familiar: we create a new message that asks the LLM to list the countries in order of population and to display that population and its percentage of the total of the countries. It’s not a difficult task for a data scientist or programmer but would require a little effort.

Next, we run the query and retrieve the messages.

Below is the pretty-printed version of the output.

# Messages

assistant: To achieve this, we will need to take the following steps:

1. Load the data from the uploaded file into a pandas DataFrame.

2. Identify the relevant columns for countries, populations, and calculate the percentages.

3. Sort the DataFrame in descending order based on the population size.

4. Calculate the percentage of the total for each country.

5. Display the resulting DataFrame or list.

Let's start by loading the data and looking at the first few rows to identify the columns we need. I'll proceed with loading the CSV file and displaying its head.

assistant: The file has been successfully loaded, and it appears to contain columns for the country names ('Country') and their respective populations ('Population (2021)').

Now, let's proceed with the following steps:

1. Sort the DataFrame based on 'Population (2021)' in descending order.

2. Calculate the percentage of the total population for each country.

3. List the names, populations, and percentages of the total in the sorted order.

assistant: Here is the list of countries with their populations and the percentage of the total population, in descending order of population size:

| Rank | Country | Population (2021) | Percentage of Total |

|-------:|--------------|-------------------:|--------------------:|

| 1 | Germany | 83,408,560 | 18.74% |

| 2 | France | 64,531,448 | 14.50% |

| 3 | Italy | 59,240,336 | 13.31% |

| 4 | Spain | 47,486,932 | 10.67% |

| 5 | Poland | 38,307,724 | 8.61% |

| 6 | Romania | 19,328,558 | 4.34% |

| 7 | Netherlands | 17,501,696 | 3.93% |

| 8 | Belgium | 11,611,416 | 2.61% |

| 9 | Czechia | 10,510,748 | 2.36% |

| 10 | Sweden | 10,467,095 | 2.35% |

| 11 | Greece | 10,445,368 | 2.35% |

| 12 | Portugal | 10,290,109 | 2.31% |

| 13 | Hungary | 9,709,784 | 2.18% |

| 14 | Austria | 8,922,086 | 2.00% |

| 15 | Bulgaria | 6,885,864 | 1.55% |

| 16 | Denmark | 5,854,246 | 1.32% |

| 17 | Finland | 5,535,982 | 1.24% |

| 18 | Slovakia | 5,447,621 | 1.22% |

| 19 | Ireland | 4,986,525 | 1.12% |

| 20 | Croatia | 4,060,139 | 0.91% |

| 21 | Lithuania | 2,786,652 | 0.63% |

| 22 | Slovenia | 2,119,408 | 0.48% |

| 23 | Latvia | 1,873,926 | 0.42% |

| 24 | Estonia | 1,328,704 | 0.30% |

| 25 | Cyprus | 1,244,193 | 0.28% |

| 26 | Luxembourg | 639,330 | 0.14% |

| 27 | Malta | 526,751 | 0.12% |

This table lists each country, its population in 2021, and the percentage that population represents of the total of all countries listed. Please note that the percentages are rounded to two decimal places for readability.

Again it is exactly the type of thing that a web-based chat might produce and shows that the LLM certainly does understand the data and how to manipulate it.

That’s about it for the code but you might want to try different prompts to see how the LLM responds; just change the content to the question that you would like answered.

Temperatures, prompts and variable responses

You may well get slightly different responses when running the code multiple times. It will contain the same data but it might be formatted differently. Different runs that I have made have sometimes given explanations and sometimes not, or the list of countries and populations might be just that, a list, or as we have seen above, a simple table.

The likely reason is twofold.

Firstly, I have not put a great deal of thought into the prompts that I’ve created for this experiment, so with a little prompt engineering you might be able to improve the responses from the LLM — you could be specific about the way you want the response formatted, for example.

Secondly, we cannot set the temperature parameter for the LLM. This temperature is essentially a measure of the randomness of the LLM’s response — a high temperature gives varied responses and is good for creative work, whereas a low temperature reduces the variation as you might want for code generation, for example.

It’s possible that setting the temperature might be an option in a later release (see this discussion) but for the moment we are stuck with what we have been given.

Towards an application

This has been a simple, bare-bones demonstration of data analysis with the Assistants API. With such a small dataset, it could easily be duplicated with ChatGPT or pretty much any other LLM-based chat service.

But this technique could be used to deal with much larger files or, indeed, many files. The file upload limit for any one organisation is 100GB and individual files cannot be more than 2 million tokens — a token is around 4 characters long, or 3/4 of a word.

Of course, the API is aimed at powering applications and one can easily imagine a Streamlit app, say, that incorporates assistants into a chat interface.

Such an app might be a tool to give responses to a dataset that is relevant to a particular client — sales data, for example — allowing the client to ask questions about sales over a particular period. Or an app could contain fixed prompts that are selected via a menu of options, or perhaps allow the client to upload their own data.

Developing a Streamlit app is not something that would fit here but might well be the subject of another article.

I hope you have found this experiment useful and thanks for reading. The code and data are available in a GitHub repo where you can download them.

The downloadable notebook contains more code than you see above but still only costs a few 10s of cents to run. However, make sure you monitor your usage on your OpenAI dashboard — it is very easy to lose track of how many times you have hit the ‘run’ button. And make sure that you delete any files that you upload when you have finished with them. You don’t want to use up your free trial quota unnecessarily.

If you would like to see more of my work you can visit my website, or subscribe to this Substack.

Notes

The GitHub repository for the Assistants Overview section of the OpenAI Cookbook can be found here. Parts of the code in this article were derived from the code in the Cookbook. The code is distributed under the MIT licence and was accessed in January 2024.

All images and screenshots are by me, the author, unless otherwise indicated.